Peanut Butter and Chocolate

Integrating Hadoop with SQL Server

Who Am I? What Am I Doing Here?

|

Catallaxy Services |  @feaselkl |

|

Curated SQL | |

|

We Speak Linux |

Core Assumptions

To get the most of this, I will assume the following:

- Hadoop is set up in your environment

- You are more familiar with T-SQL (or .NET) than any Hadoop-centric language

- You want to combine use Hadoop in conjunction with SQL Server

Why Integrate?

That the Division of Labour is Limited by the Extent of the Market

As it is the power of exchanging that gives occasion to the division of labour, so the extent of this division must always be limited by the extent of that power, or, in other words, by the extent of the market. When the market is very small, no person can have any encouragement to dedicate himself entirely to one employment, for want of the power to exchange all that surplus part of the produce of his own labour, which is over and above his own consumption, for such parts of the produce of other men’s labour as he has occasion for.

- Adam Smith, The Wealth of Nations, Book I, section III

Why Integrate?

Specialization and division of labor. Works for people, works for data.

SQL Server as a relational engine:

- Provably good transactional systems (ACID compliance, normal forms, constraints, etc.)

- Quick return times for application interfaces

- Warehousing: answering known business questions

- Great model with a lot of talented people

Why Integrate?

Specialization and division of labor. Works for people, works for data.

Hadoop as a processing engine:

- Aggregation of log data

- Allocation and batch processing

- Processing non-relational data (e.g., genetic data)

- Text processing

- "Amorphous" data

- Spelunking: answering unknown business questions

Goals

- Show several methods for integrating Hadoop with SQL Server.

- Begin to show the value of this integration.

- Take a glimpse into the world of data over the next several years.

- Shoehorn economics and baseball into a technical discussion.

Integration Methods

- Ambari

- Sqoop

- .NET

- SSIS

- Linked Servers

- Polybase

Ambari

Basic Idea

Ambari is a framework for monitoring and administering a Hadoop cluster.

It includes tools to maintain HDFS clusters, Hive tables, and much more.

Ambari

When To Use

Use Ambari for one-off, occasional work. This is the easiest method, but also the most time-consuming.

Ambari

Basic Steps

- Create files and folders in HDFS

- Upload files into HDFS

- Create and query Hive tables

- Output Hive results to files

Ambari

Demo Time

Scroll down for image-heavy demo.

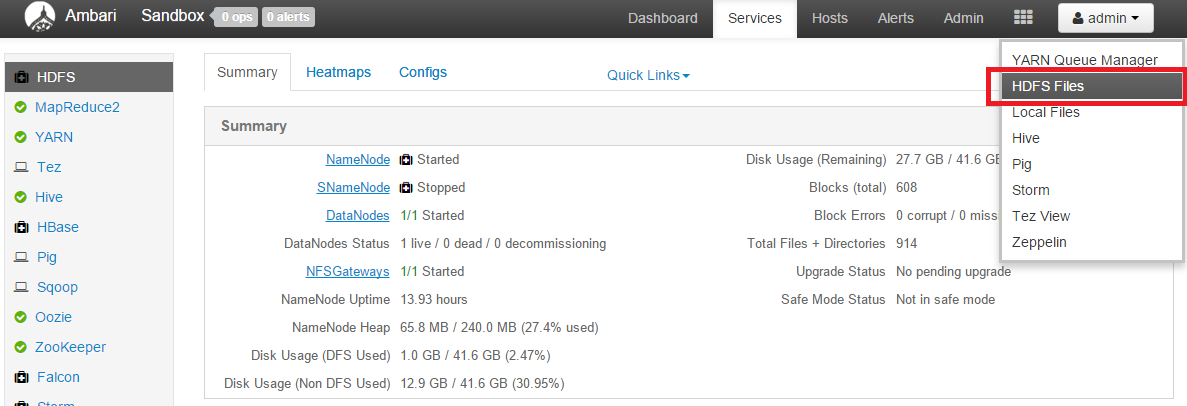

Ambari

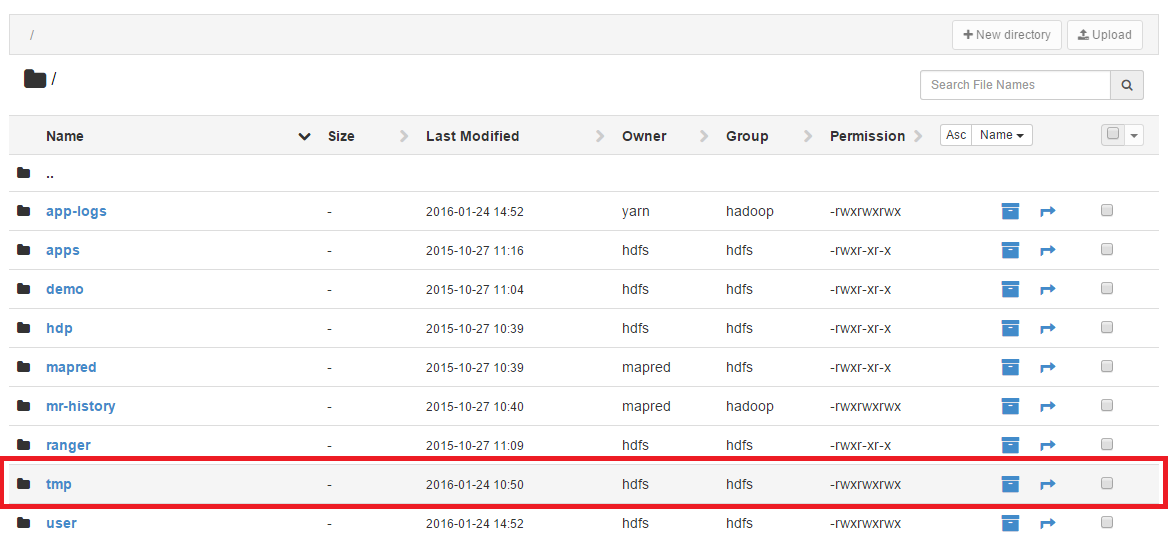

Open HDFS Files.

Ambari

Go to the tmp folder.

Ambari

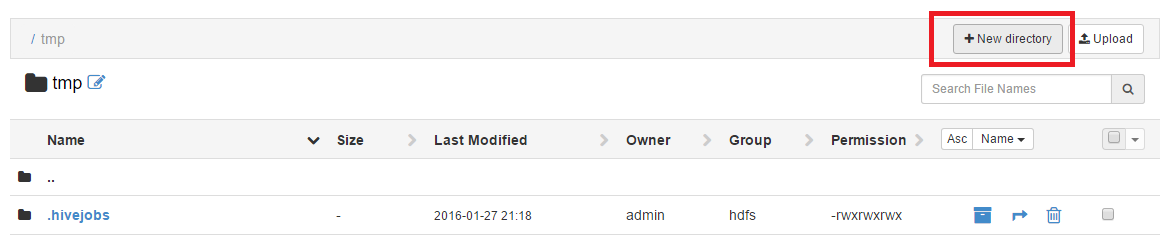

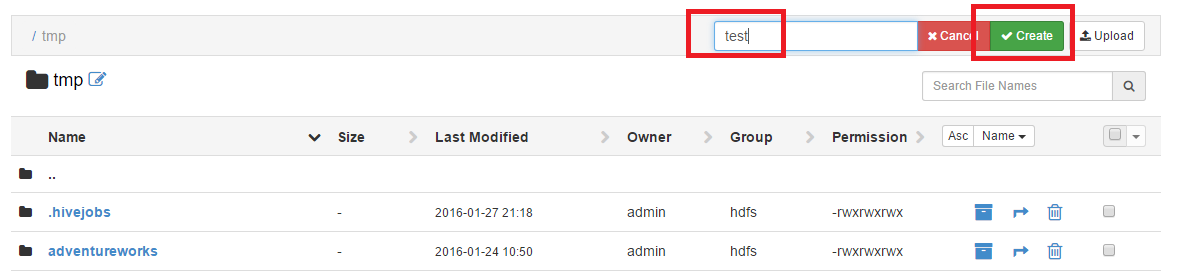

Create a new directory.

Ambari

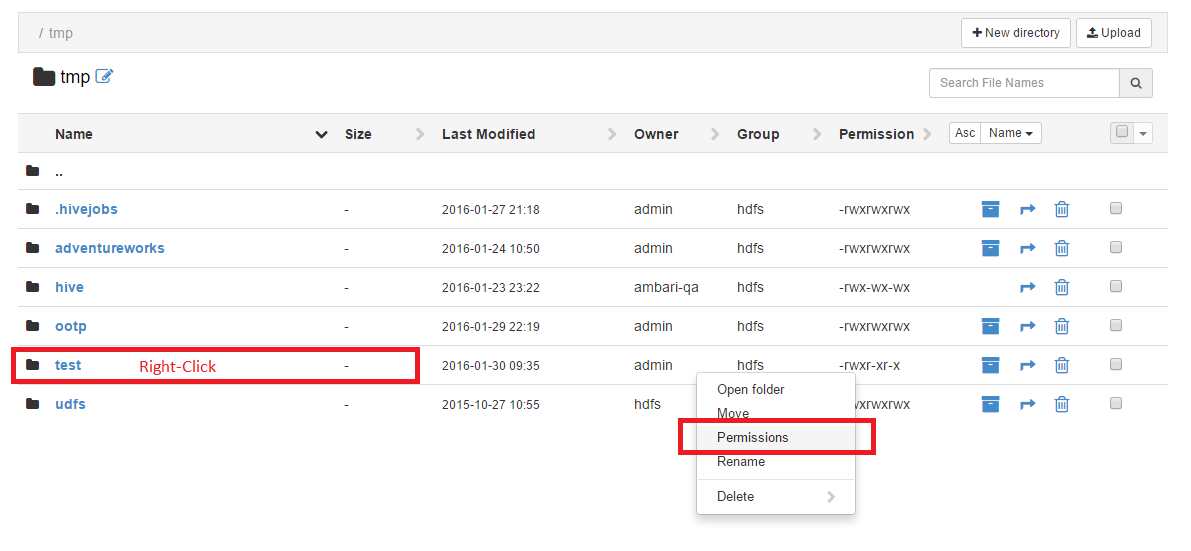

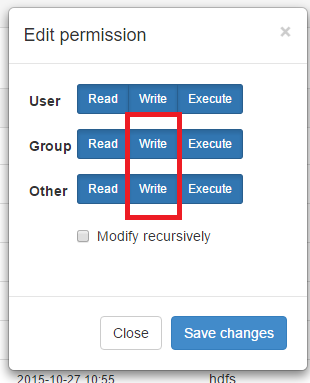

Set folder permissions.

Ambari

Set folder permissions. For production, don't indiscriminately do this, of course...

Ambari

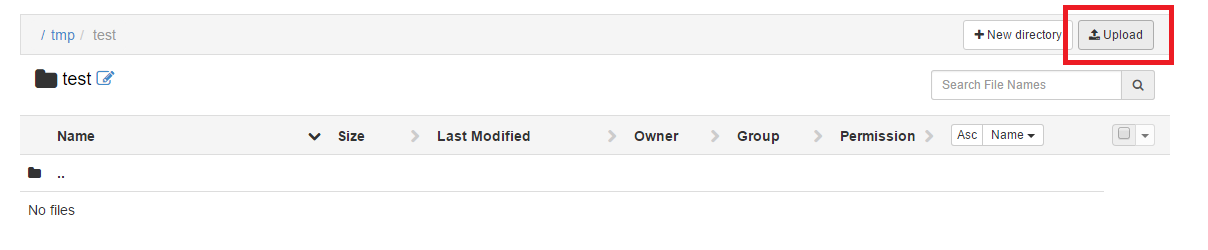

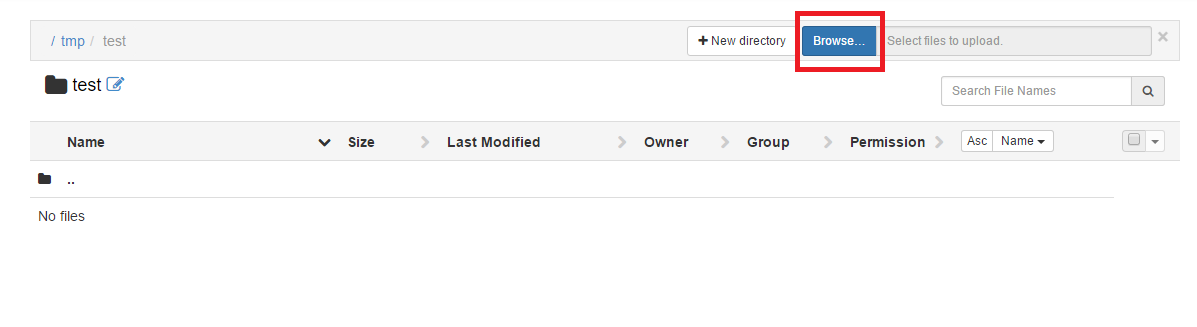

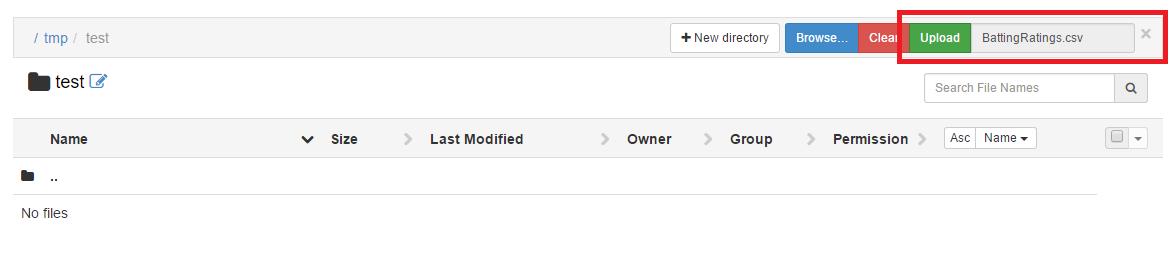

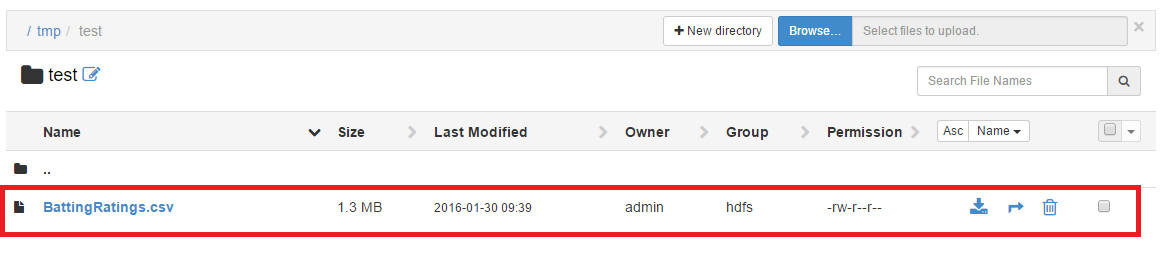

Upload a file.

Ambari

Upload a file.

Ambari

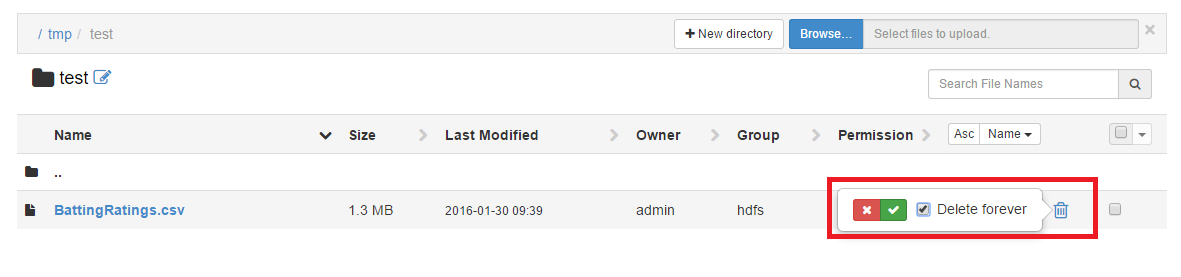

Delete a file.

Ambari

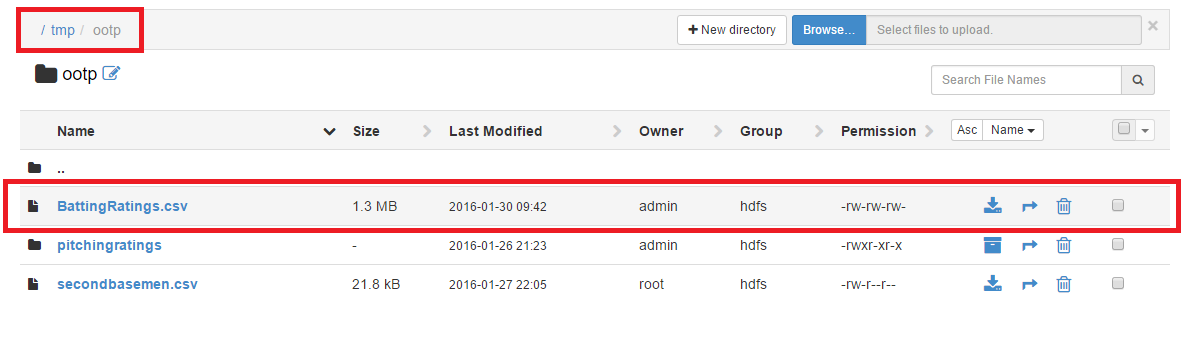

For Hive work, copy BattingRatings.csv to /tmp/ootp. Make sure that /tmp/ootp and the file both have write turned on.

Ambari

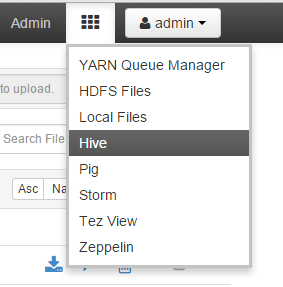

Open up Hive.

Ambari

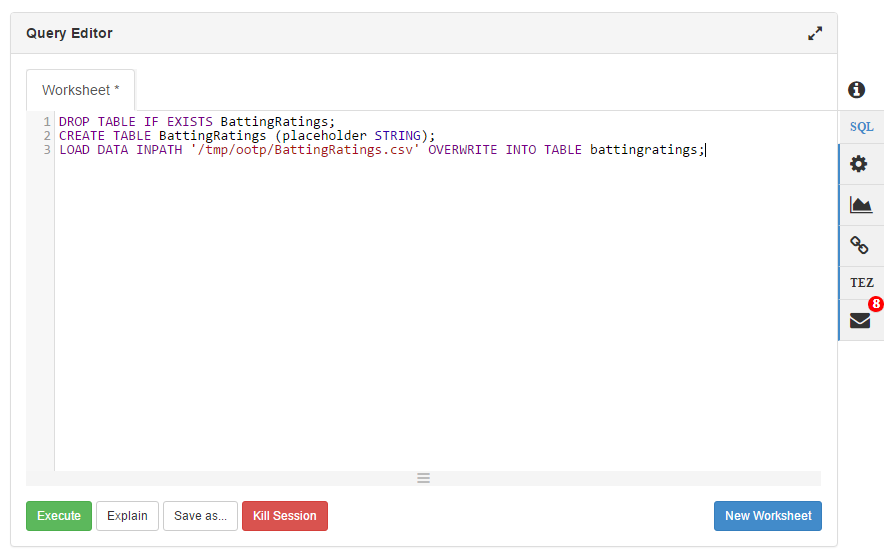

Create the BattingRatings table.

Ambari

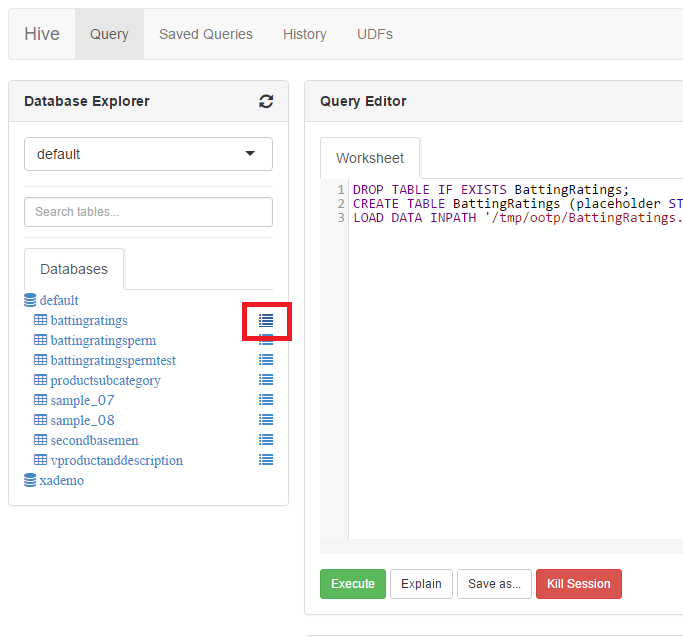

Select top 100 rows from the BattingRatings table.

Ambari

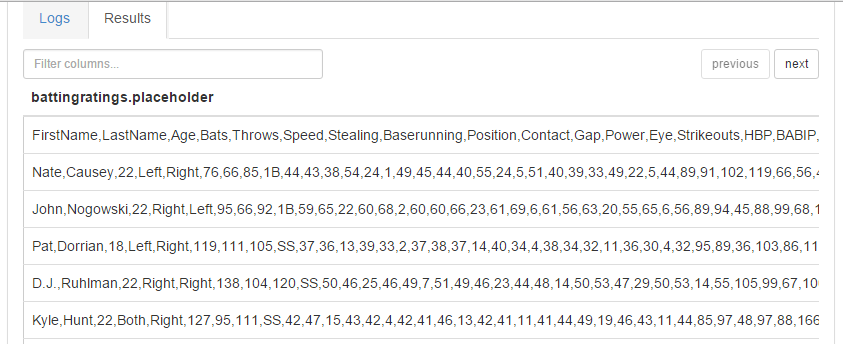

BattingRatings table results.

Ambari

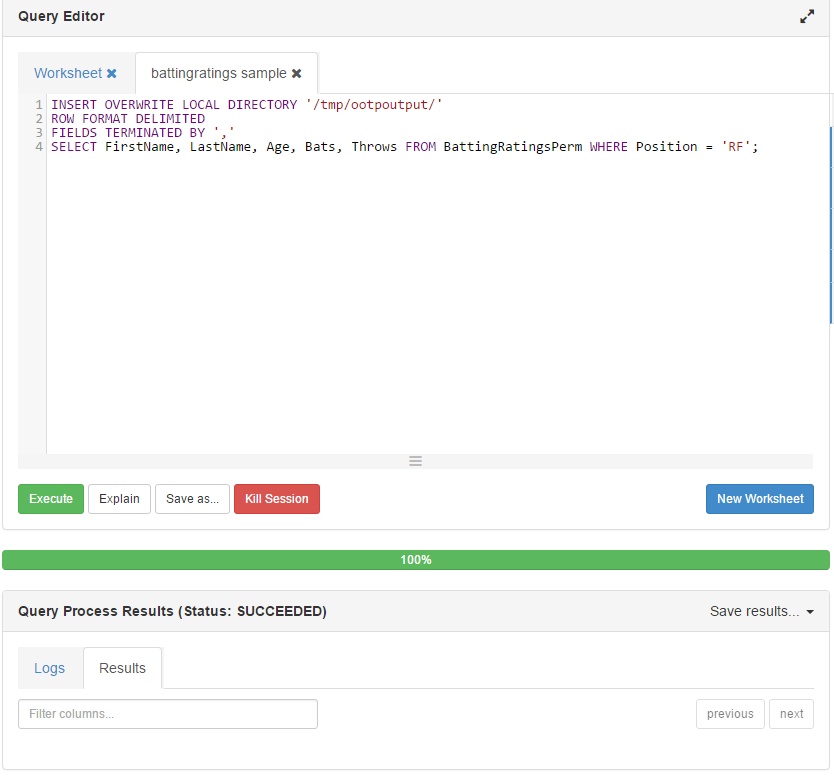

Write results to output to load into SQL Server.

Ambari

Write Hive query to file. Run the BattingRatingsPerm script first.

Ambari

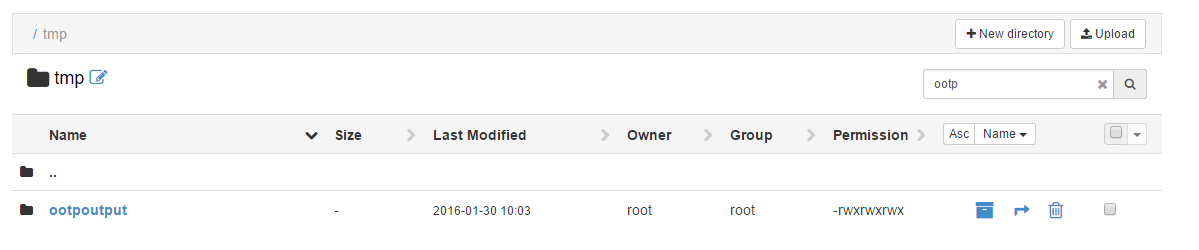

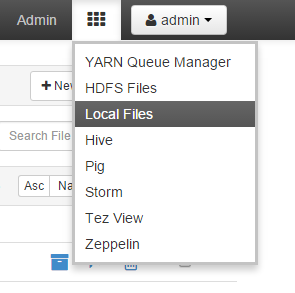

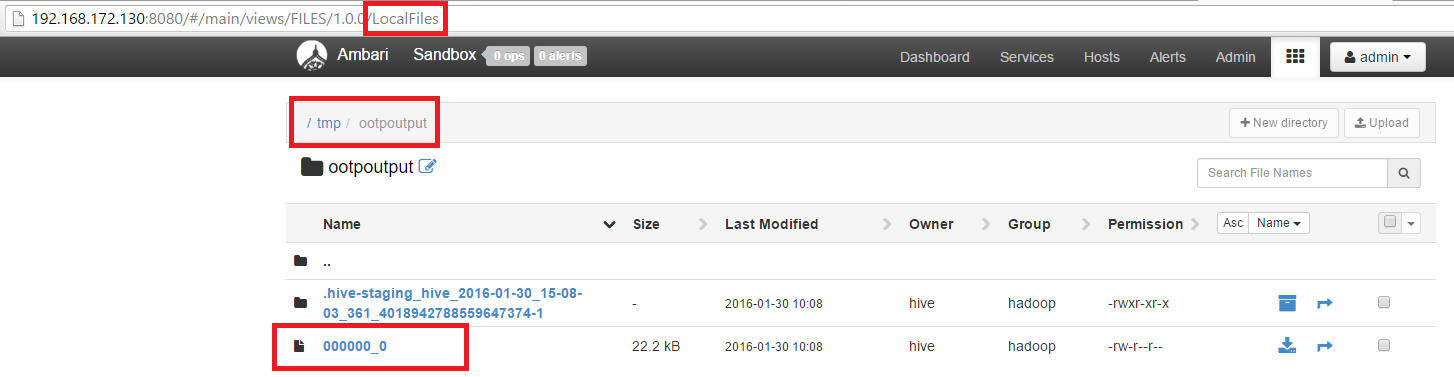

The output is LOCAL files, not HDFS. We specified that in the query.

Ambari

Our output file is in /tmp/ootpoutput.

Integration Methods

- Ambari

- Sqoop

- .NET Program

- SSIS

- Linked Servers

- Polybase

Sqoop

Sqoop is a quick-and-easy console program designed to ingest data from database servers (like SQL Server) into Hadoop and also push data back to database servers.

Pre-req: install the Microsoft JDBC driver.

Sqoop

When To Use

Sqoop is good for loading entire tables/databases into Hadoop and loading staging tables into SQL Server.

Sqoop

Demo Time

Scroll down for image-heavy demo.

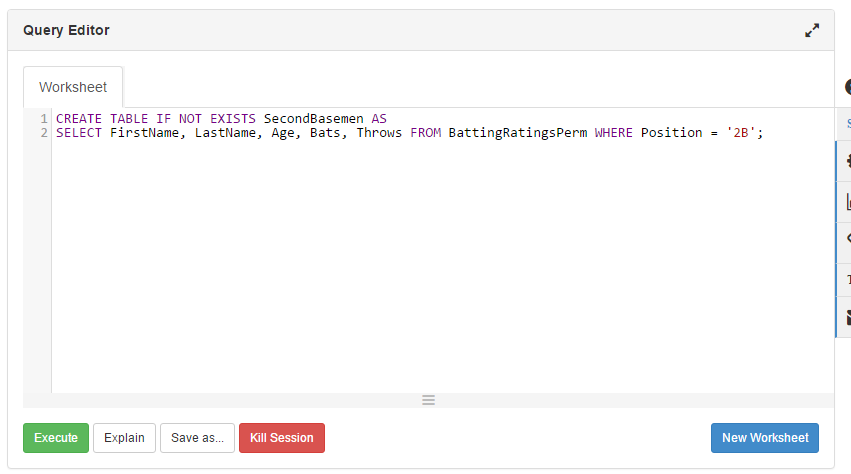

Sqoop

Create the SecondBasemen table.

Sqoop

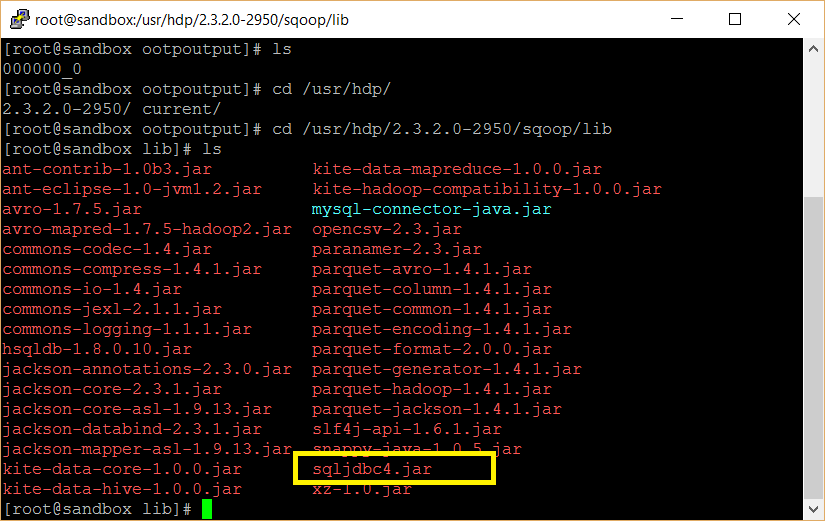

Grab the Microsoft JDBC driver and put into /usr/hadoop/[version]/sqoop/lib.

Sqoop

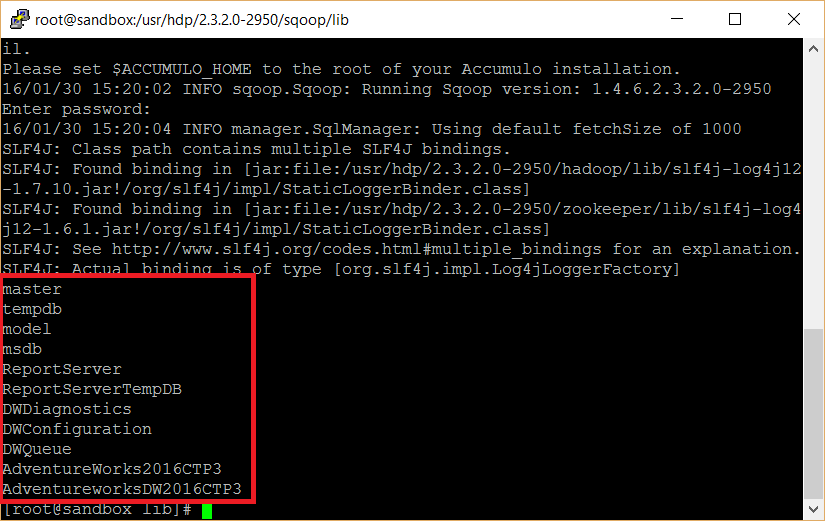

sqoop list-databases will list databases

Sqoop

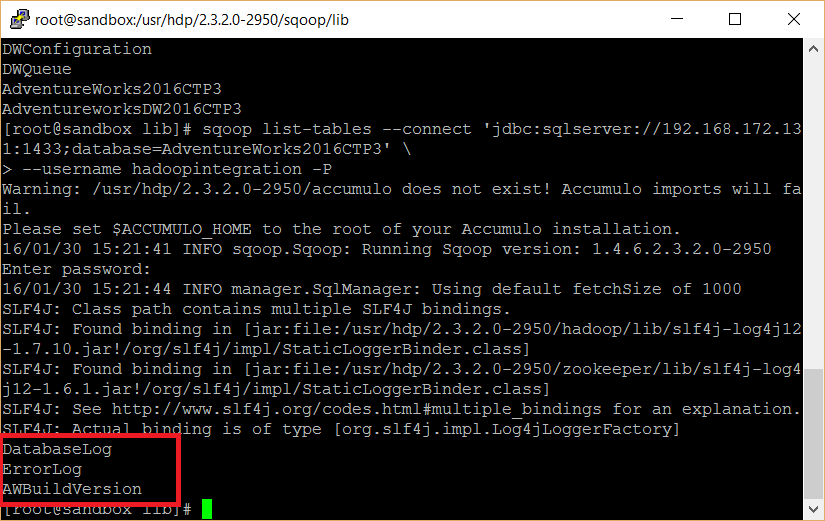

sqoop list-tables will list tables for the default schema

Sqoop

Other important options:

- sqoop import loads a table into Hadoop

- sqoop export writes a table to your RMDBS

Check out SqoopCommands.txt for examples on how to use these commands and more.

Integration Methods

- Ambari

- Sqoop

- .NET Program

- SSIS

- Linked Servers

- Polybase

.NET Program

If you install the Microsoft.Hadoop.MapReduce NuGet package, you can connect to a Hadoop cluster and perform file maintenance.

Our example will use FSharp.Data.SqlClient to insert rows into SQL Server.

.NET Program

When To Use

Use .NET when you have complex and custom data flows, or if most of your ETL infrastructure is in .NET.

.NET Program

Demo Time

Check out HDFSFileManagement.FSharp for the code.

Integration Methods

- Ambari

- Sqoop

- .NET Program

- SSIS

- Linked Servers

- Polybase

SSIS

Visual Studio + SQL Server Data Tools 2016 leads to good Hadoop integration.

SSDT 2016 includes HDFS file source and destination via WebHDFS or WebHCat.

If you're using older versions of VS or SSDT, you'll have limited access.

SSIS

When To Use

Use SSIS for Hadoop ETL if this is what you normally use for SQL Server ETL.

SSIS

Demo Time

Check out HadoopIntegration.SSIS for the code.

Integration Methods

- Ambari

- Sqoop

- .NET Program

- SSIS

- Linked Servers

- Polybase

Linked Servers

Linked servers let us connect external resources to SQL Server and query them like SQL tables.

Although the most common use case for linked servers is connecting other SQL Server instances, we can also tie in Excel, flat files, and even Hive tables.

Linked Servers

When To Use

Use linked servers to connect Hive and SQL tables together in queries, particularly before SQL Server 2016.

Linked Servers

Demo Time

Scroll down for image-heavy demo.

Linked Servers

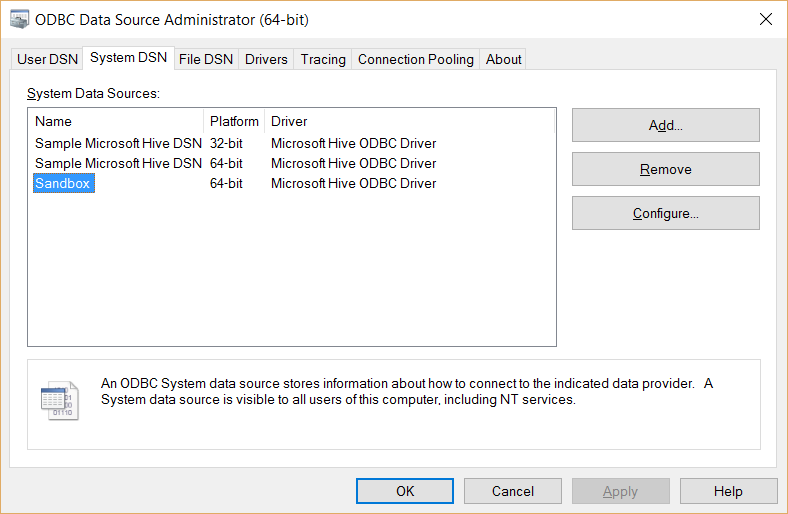

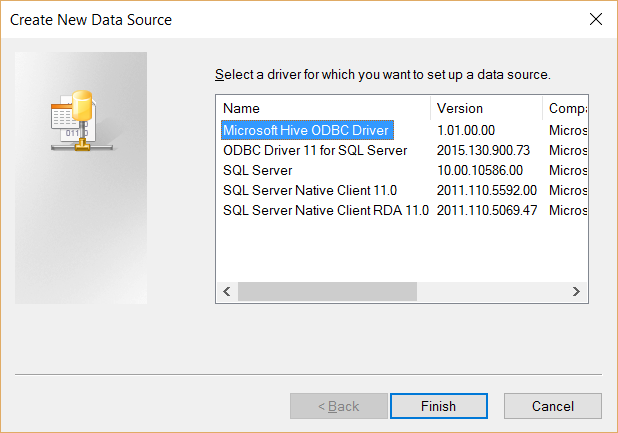

Grab the Microsoft Hive ODBC driver. Then, create a System DSN.

Linked Servers

Add a new DSN using the Hive ODBC driver.

Linked Servers

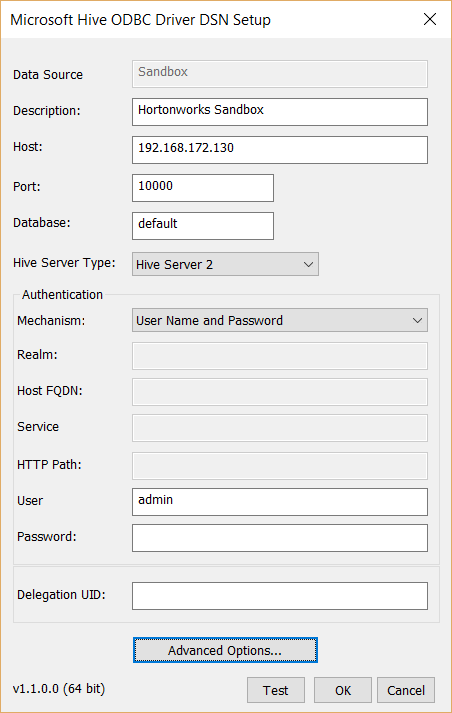

Configure the Hive DSN.

Linked Servers

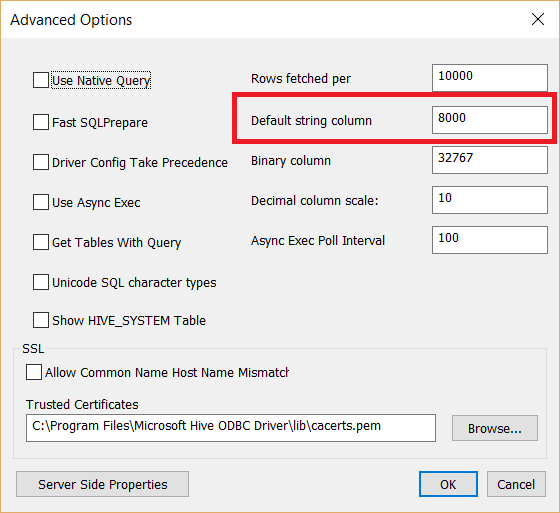

Make sure that "Default string column" is no more than 8000 bytes.

Integration Methods

- Ambari

- Sqoop

- .NET Program

- SSIS

- Linked Servers

- Polybase

Polybase

Polybase is Microsoft's latest Hadoop integration offering. First introduced in SQL Server 2012 PDW (now APS), it is now generally available in SQL Server 2016.

Why Polybase?

Polybase has several advantages over linked servers:

- Predicate pushdown

- Statistics on external tables

- Parallelized SQL Server (control and compute nodes for external table queries)

- Superior query analytics

Why Polybase?

Polybase is not perfect:

- MapReduce only: no Spark, no Tez

- Limited collation support (worse for non-US customers)

Polybase

When To Use

Use Polybase to join SQL tables to Hadoop data sets, particularly if you want to write T-SQL to query the data.

Polybase

Demo Time

Comparison Table

| Method | Good | Bad | Best Uses |

| Ambari | Easy to use | Manual processing | One-off moves |

| Sqoop | Easy to use; easy to automate | Cannot create SQL tables; limited query support | Staging table ETL; migrating into Hadoop |

| .NET | Powerful | Harder to write; finicky | Custom processing |

| SSIS | Good ETL patterns; integrates nicely | HDFS objects not (yet) complete; joining data is slow | Established ETL program |

| Linked Server | T-SQL syntax; LS common | Needs ODBC driver; can be slow; just Hive | Joining SQL data with Hive data |

| Polybase | T-SQL; Perf; Intellisense | Only MapReduce | Joining SQL data with HDFS data |

Wrapping Up

There are plenty of ways to integrate Hadoop with SQL Server. Mix and match methods as they suit you needs.

To learn more, go here: http://CSmore.info/on/hadoopsql

And for help, contact me: feasel@catallaxyservices.com | @feaselkl